As the global chip shortage rages on, many chip manufacturers have had to slow or even halt semiconductor production. Makers of all kinds of electronics, such as switches, PCs, and servers, are scrambling to get enough chips in the pipeline to match surging demand for their products. Every manufacturer, supplier, and solution provider in the datacenter industry is feeling the impact of the ongoing chip scarcity, and relief is nowhere in sight.

What’s Happening?

Due to the rise of AI and cloud computing, datacenter chips have been a hot topic in recent times. Because networking switches and modern servers, both indispensable in datacenter applications, use more advanced components than an average consumer PC, chip manufacturers and suppliers naturally give data centers top priority. However, with demand for data center machines far outstripping supply, chip shortages may persist over the next few years. Coupled with the economic uncertainty caused by the pandemic, this puts further stress on datacenter management.

According to a report from the Dell’Oro Group, robust datacenter switch sales over the past year could foretell a looming shortage. As the mismatch in supply and demand keeps growing, enterprises looking to buy datacenter switches face extended lead times and elevated costs over the course of the next year.

“So supply is decreasing and demand is increasing,” said Sameh Boujelbene, leader of the analyst firm’s campus and data-center research team. “There’s a belief that things will get worse in the second half of the year, but no consensus on when it’ll start getting better.”

Back in March, Broadcom said that more than 90% of its total chip output for 2021 had already been ordered by customers, who are pressuring it for chips to meet booming demand for servers used in cloud data centers and consumer electronics such as 5G phones.

“We intend to meet such demand, and in doing so, we will maintain our disciplined process of carefully reviewing our backlog, identifying real end-user demand, and delivering products accordingly,” CEO Hock Tan said on a conference call with investors and analysts.

Major Implications

Extended Lead Times

Arista Networks, one of the largest data center networking switch vendors and a supplier of switches to cloud providers, expects switch-silicon lead times to stretch to as long as 52 weeks.

“The supply chain has never been so constrained in Arista history,” the company’s CEO, Jayshree Ullal, said on an earnings call. “To put this in perspective, we now have to plan for many components with 52-week lead time. COVID has resulted in substrate and wafer shortages and reduced assembly capacity. Our contract manufacturers have experienced significant volatility due to country specific COVID orders. Naturally, we’re working more closely with our strategic suppliers to improve planning and delivery.”

Hock Tan, CEO of Broadcom, also acknowledged on an earnings call that the company had “started extending lead times.” He said part of the problem was that customers were now ordering more chips and demanding them faster than usual, hoping to buffer against supply chain issues.

Elevated Cost

Vertiv, one of the biggest sellers of datacenter power and cooling equipment, mentioned it had to delay previously planned “footprint optimization programs” due to strained supply. The company’s CEO, Robert Johnson, said on an earnings call, “We have decided to delay some of those programs.”

Supply chain constraints combined with inflation would cause “some incremental unexpected costs over the short term,” he said. “To share the cost with our customers where possible may be part of the solution.”

“Prices are definitely going to be higher for a lot of devices that require a semiconductor,” says David Yoffie, a Harvard Business School professor who spent almost three decades serving on the board of Intel.

Conclusion

There is no telling how the situation will play out or, most importantly, when supply and demand might return to normal. Opinions vary on when the shortage will end: the CEO of chipmaker STMicro estimated that it will end by early 2023, while Intel CEO Patrick Gelsinger said it could last two more years.

As a high-tech network solutions and services provider, FS has been actively working with customers to help them plan for, adapt to, and overcome supply chain challenges, so that we can ride out this chip shortage together. There is still reason for hope. As Bill Wyckoff, vice president at technology equipment provider SHI International, put it, “This is not an ‘all is lost’ situation. There are ways and means to keep your equipment procurement and refresh plans on track if you work with the right partners.”

Article Source: Impact of Chip Shortage on Datacenter Industry

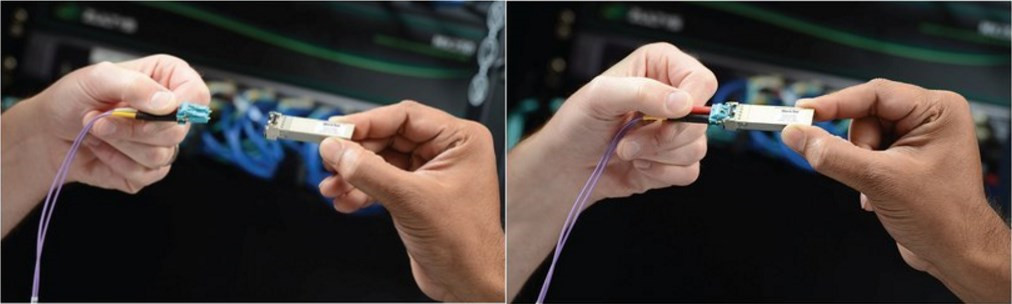

As fiber deployment has become mainstream, splicing has naturally crossed from the outside plant (OSP) world into the enterprise and even the data center environment. Fusion splicing uses localized heat to melt, or fuse, the ends of two optical fibers together. Preparation involves removing the protective coating from each fiber, cleaving precisely, and inspecting the fiber end-faces. Fusion splicing has been around for several decades, and it is a trusted method for permanently joining the ends of two optical fibers, whether to reach a specific length or to repair a broken fiber link. However, because fusion splicers are expensive, the method has not been widely adopted. In recent years, improvements in optical technology have begun to change this, and the continued demand for more bandwidth has also broadened the use of fusion splicing.
