SDN, short for Software-Defined Networking, is a networking architecture that separates the control plane from the data plane. It involves decoupling network intelligence and policies from the underlying network infrastructure, providing a centralized management and control framework.

How does Software-Defined Networking (SDN) Work?

SDN operates by employing a centralized controller that manages and configures network devices, such as switches and routers, through open protocols like OpenFlow. This controller acts as the brain of the network, allowing administrators to define network behavior and policies centrally, which are then enforced across the entire network infrastructure.

An SDN network can be divided into three layers, each of which consists of several components.

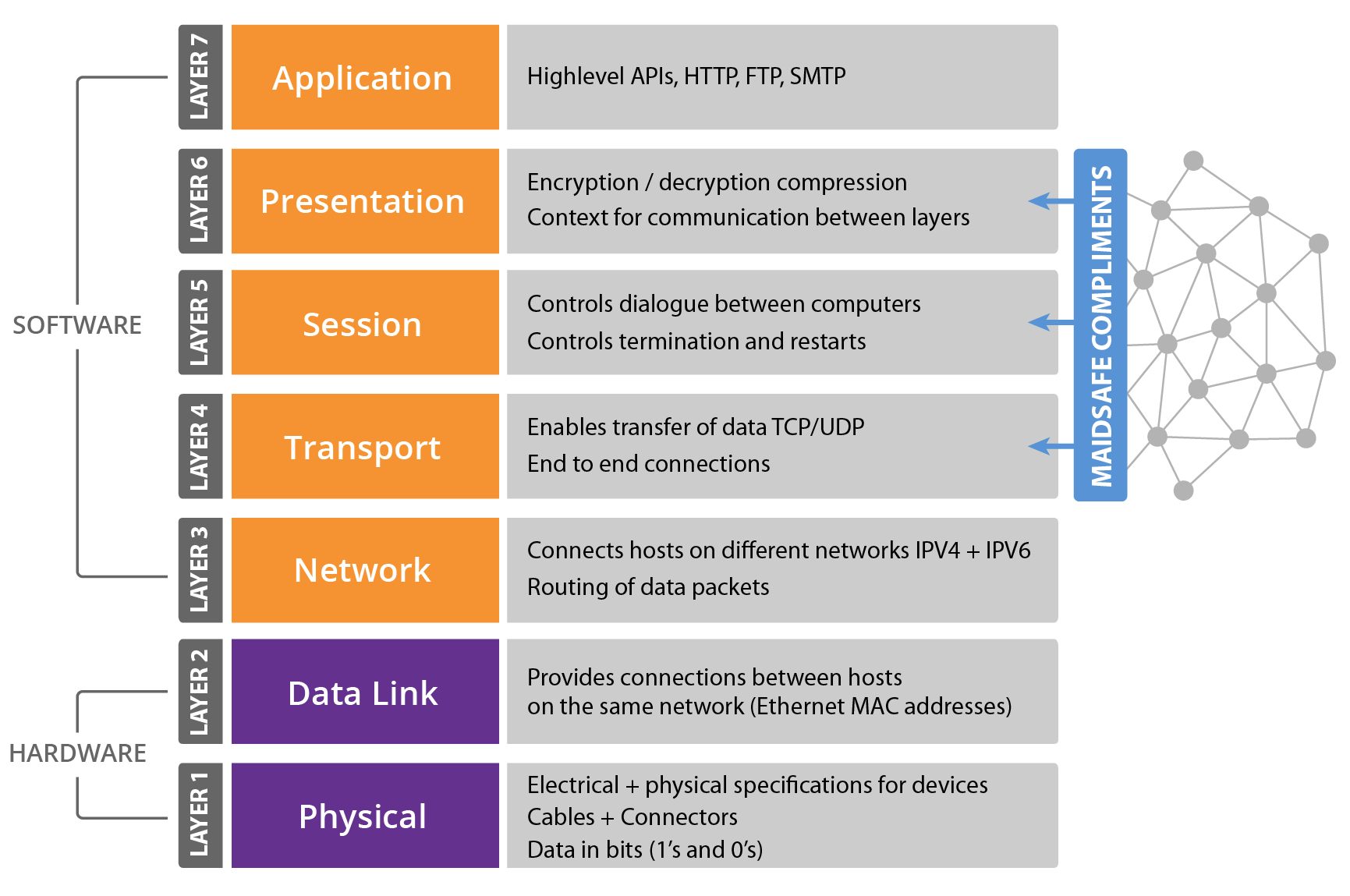

- Application layer: The application layer contains the network applications and functions that organizations use, for example applications for network monitoring, network troubleshooting, network policies, and security.

- Control layer: The control layer is the middle layer that connects the infrastructure layer and the application layer. It consists of the centralized SDN controller software and is home to the control plane, where the network's intelligent logic resides and is made available to the application layer.

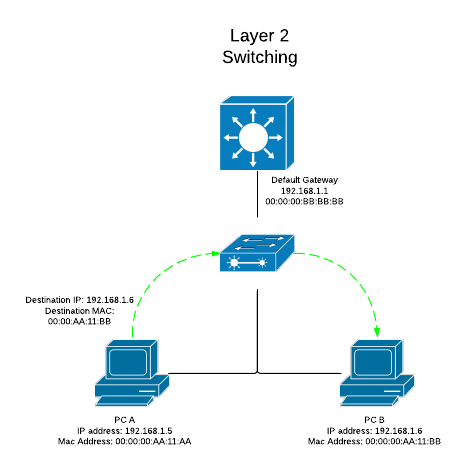

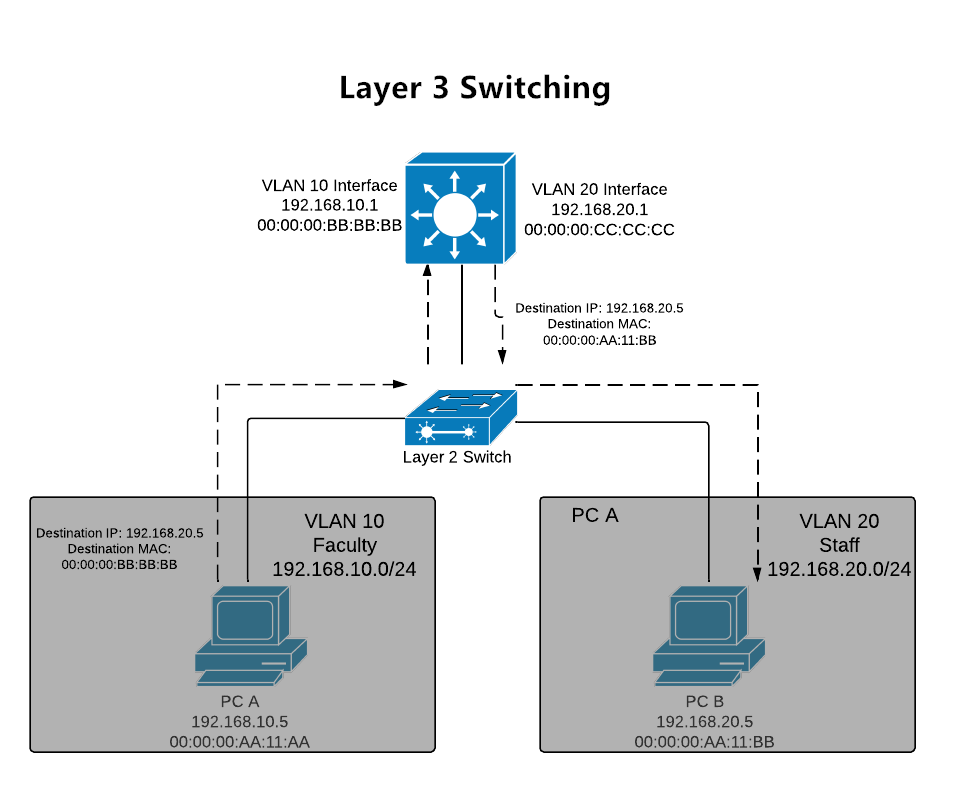

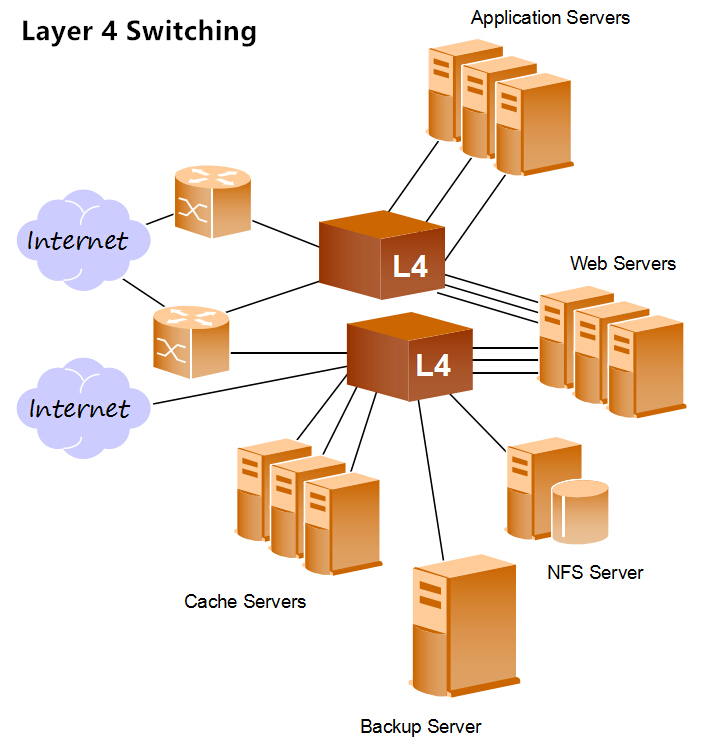

- Infrastructure layer: The infrastructure layer consists of networking equipment, such as network switches, servers, or gateways, which forms the underlying network and forwards traffic to its destination.

Northbound and southbound application programming interfaces (APIs) are used for communication between the three layers of an SDN network. The northbound API enables communication between the application layer and the controller, while the southbound API allows the controller to communicate with the networking equipment.
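The relationship between the three layers can be sketched in a few lines of Python. This is a toy model rather than a real controller: the class names `Switch` and `Controller`, the method names `install_rule` and `block_host`, and the rule format are all hypothetical and chosen only for illustration. The application calls the controller (the northbound direction), and the controller pushes rules to every device (the southbound direction).

```python
from dataclasses import dataclass, field


@dataclass
class Switch:
    """Infrastructure layer: a device that only stores and applies rules."""
    name: str
    rules: list = field(default_factory=list)

    def install_rule(self, rule: dict) -> None:
        # Southbound direction: the controller pushes a rule down to the device.
        self.rules.append(rule)


@dataclass
class Controller:
    """Control layer: holds the network-wide view and the policy logic."""
    switches: list = field(default_factory=list)

    def block_host(self, ip: str) -> int:
        # Northbound direction: an application states an intent
        # ("block this host") and the controller turns it into device rules.
        rule = {"match": {"src_ip": ip}, "action": "drop"}
        for switch in self.switches:
            switch.install_rule(rule)
        return len(self.switches)


def security_app(controller: Controller, suspicious_ip: str) -> int:
    """Application layer: a toy security application."""
    return controller.block_host(suspicious_ip)


edge = Switch("edge-1")
core = Switch("core-1")
controller = Controller(switches=[edge, core])

updated = security_app(controller, "10.0.0.99")
print(updated, edge.rules)
```

The application never touches a switch directly; it only talks to the controller, which is what makes central policy definition possible.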

What are the Different Models of SDN?

Depending on how the controller layer is connected to SDN devices, SDN networks can be divided into four types:

- Open SDN

Open SDN has a centralized control plane and uses OpenFlow as the southbound API between the SDN controller and the physical or virtual switches that carry the traffic.

- API SDN

API SDN differs from open SDN. Rather than relying on an open protocol, it uses application programming interfaces to control how data moves through the network on each device.

- Overlay Model SDN

Overlay model SDN doesn’t address the physical networks underneath but builds a virtual network on top of the existing hardware. It operates on an overlay network and provides tunnels with channels to data centers to solve data center connectivity issues.

- Hybrid Model SDN

Hybrid model SDN, also called automation-based SDN, blends SDN features with traditional networking equipment. It uses automation tools such as agents and Python, along with components that support different types of operating systems.
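To make the Open SDN model above more concrete, the following Python sketch shows the match-action idea behind an OpenFlow-style switch: the controller installs flow entries, and the switch looks up each packet against them. It is a simplified illustration and not the OpenFlow protocol itself. The names `FlowEntry`, `FlowTable`, and the action strings are hypothetical, and the sketch assumes that a packet with no matching entry is handed to the controller, which in a real deployment depends on how the table-miss behavior is configured.

```python
from dataclasses import dataclass


@dataclass
class FlowEntry:
    match: dict        # header fields that must all equal the packet's values
    action: str        # for example "output:2" or "drop"
    priority: int = 0  # higher values are checked first


class FlowTable:
    def __init__(self):
        self.entries = []

    def add(self, entry: FlowEntry) -> None:
        self.entries.append(entry)
        # Keep the highest priority first so the first hit wins.
        self.entries.sort(key=lambda e: e.priority, reverse=True)

    def lookup(self, packet: dict) -> str:
        for entry in self.entries:
            if all(packet.get(k) == v for k, v in entry.match.items()):
                return entry.action
        # Table miss: this sketch assumes the packet goes to the controller.
        return "send_to_controller"


table = FlowTable()
table.add(FlowEntry({"dst_ip": "10.0.0.5"}, "output:2", priority=10))
table.add(FlowEntry({"src_ip": "10.0.0.99"}, "drop", priority=100))

print(table.lookup({"src_ip": "10.0.0.1", "dst_ip": "10.0.0.5"}))   # output:2
print(table.lookup({"src_ip": "10.0.0.99", "dst_ip": "10.0.0.5"}))  # drop
print(table.lookup({"src_ip": "10.0.0.1", "dst_ip": "10.0.0.7"}))   # send_to_controller
```

The second lookup matches both entries, and the drop rule wins because it has the higher priority. This is how a centrally defined security policy can override ordinary forwarding.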

What are the Advantages of SDN?

Different SDN models have their own merits. Here we will only talk about the general benefits that SDN has for the network.

- Centralized Management

Centralization is one of the main advantages of SDN. An SDN network can be managed from a single central tool, which benefits data center managers. It breaks down the barriers created by traditional systems and provides more agility for both virtual and physical network provisioning, all from a central location.

- Security

Although the trend toward virtualization has made it more difficult to secure networks against external threats, SDN brings significant advantages. The SDN controller gives network engineers a centralized location from which to control the security of the entire network. Through the controller, security policies and information are applied consistently across the network, and having a single management system helps to enhance security further.

- Cost-Savings

An SDN network offers users lower operational costs and lower capital expenditure. For one thing, the traditional way to ensure network availability was to add redundant equipment, which of course adds cost. By comparison, a software-defined network is much more efficient and does not require acquiring more network switches. For another, SDN works well with virtualization, which also helps to reduce the cost of adding hardware.

- Scalability

Because the OpenFlow agent and the SDN controller allow access to the various network components through centralized management, SDN gives users more scalability. Compared to a traditional network setup, engineers have more options for changing the network infrastructure quickly, without purchasing and configuring resources manually.

In conclusion, in modern data centers, where agility and efficiency are critical, SDN plays a vital role. By virtualizing network resources, SDN enables administrators to automate network management tasks and streamline operations, resulting in improved efficiency, reduced costs, and faster time to market for new services.

SDN is transforming the way data centers operate, providing tremendous flexibility, scalability, and control over network resources. By embracing SDN, organizations can unleash the full potential of their data centers and stay ahead in an increasingly digital and interconnected world.
